How Crawl Optimization Improves Website Sustainability

In this post, we explore how crawl optimization impacts website sustainability and performance. We also touch on tools and resources that can help you reduce unnecessary crawling.

We’re big fans of Yoast, an SEO plugin for WordPress. It is a very useful tool to help people quickly understand how they can optimize web content for search engines. We recommend it to our clients all the time.

So when Yoast started pitching their SEO plugin as an environmental solution, naturally our curiosity was piqued:

- Was this just greenwashing?

- How do they quantify the environmental benefits of using their software?

- What environmental claims can companies make by using the Yoast plugin?

Researching Yoast’s claims inspired us to dig deeper into the topic of crawl optimization for both business and website sustainability benefits. Read on to learn more about how to:

- Remove excess links, tags, and other information to reduce crawl data

- Improve website speed, navigation, and sustainability

- Quantify the results of good crawl optimization practices

What is a Bot Crawler?

It is believed that over 40% of all internet traffic is comprised of bot traffic, and a significant portion of that is malicious bots. This is why so many organizations are looking for ways to manage the bot traffic coming to their sites.

— What is Bot Traffic?, Cloudflare

First, let’s break down the basics. Bots, also referred to as web crawlers, are automated programs that generate non-human traffic to a website, app, or other digital product. They comb the web, following links, analyzing pages, and executing whatever functions they were designed to perform. These can include helpful tasks like:

- Page and content ranking for search engines

- Website health and performance monitoring

- Maintenance tasks, such as aggregating website content or checking backlinks

However, on a darker note, bots are also commonly programmed to perform a variety of nefarious tasks, such as spamming contact forms and injecting malicious code into websites via known security vulnerabilities.

This is why many organizations try to restrict bad bot traffic as much as possible while also supporting the helpful tasks listed above. Sometimes, this can be a tricky needle to thread.

What is Crawl Optimization?

Crawl optimization helps you support the good bots and weed out the bad ones. It is the process of optimizing various site settings to block known bad actors while also removing excess links, tags, and other metadata from a website to improve navigation, reduce load time, and help search engines better crawl your content.

Removing these extra elements—as long as you don’t break your website in the process—can reduce the amount of data that web crawlers need to sift through in order to accurately assess your website. There are also environmental benefits to this approach, which we’ll get to in a bit.

Crawl Optimization and SEO

Crawl optimization is useful for SEO because it helps search crawlers better navigate your website and provide more relevant results. This is especially true for very large websites.

To clarify, Google allocates a crawl budget to better manage the computing resources it uses on each site. Crawl optimization can help maximize the crawl budget of large websites, as a high page count can adversely impact indexing.

Also, the faster Googlebot or other search crawlers can crawl a page, the more pages they can fit within a site’s allotted crawl budget. Hence, there is a good case to be made for website performance optimization as well as for improving the crawl settings described in this post.

Crawl Optimization and Bad Bots

The Imperva Bad Bot Report estimated that 42.3% of all internet activity in 2021 was bot traffic. Bad bots can interfere with an organization’s business goals in a variety of ways:

- Click Fraud: Advertising click fraud can significantly drive up costs to advertisers while providing no meaningful value.

- Form Spam: Bots can spam contact forms, comment sections, and even search bars with scam messages.

- Advertising: Bots can also be used to propagate information that promotes illegal and ethically questionable activities. This can damage your brand and expose you—and your audience—to malware in extreme cases.

Crawl optimization can also improve security and data privacy. For instance, you might want to block specific IP addresses (or a range of them) because entities associated with those addresses are spamming or otherwise attacking your website.
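In practice, IP blocking usually happens at the firewall, web server, or CDN level (for example, in Cloudflare). The matching logic itself is simple, though. Here is a minimal Python sketch using the standard-library `ipaddress` module; the blocklist and the `is_blocked` helper are hypothetical, and the ranges are reserved documentation addresses rather than real offenders.

```python
import ipaddress

# Hypothetical blocklist. These are reserved documentation ranges
# (RFC 5737 for IPv4, RFC 3849 for IPv6), not real attackers.
BLOCKED_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),   # an entire /24 range
    ipaddress.ip_network("198.51.100.7/32"),  # a single IPv4 address
    ipaddress.ip_network("2001:db8::/32"),    # an IPv6 range
]


def is_blocked(client_ip: str) -> bool:
    """Return True if client_ip falls inside any blocked network."""
    addr = ipaddress.ip_address(client_ip)  # raises ValueError on malformed input
    # Testing an IPv4 address against an IPv6 network simply returns False,
    # so both address families can live in one list.
    return any(addr in network for network in BLOCKED_NETWORKS)


print(is_blocked("203.0.113.42"))  # True
print(is_blocked("192.0.2.1"))     # False
```

Storing networks rather than individual addresses lets one entry cover a whole range, which is how most firewall and CDN rules express blocks as well.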

A Content Security Policy (CSP) adds another layer of protection. A CSP is an HTTP response header that tells browsers which sources they may load scripts and other resources from, and which sites may embed your pages in frames. This helps prevent clickjacking, cross-site scripting, and other code injection attacks, which can be used for everything from distributing malware to defacing sites and stealing data.
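As a rough illustration, here is a minimal Python sketch that assembles a CSP header value from a dictionary of directives. The directive names are standard CSP directives, but the policy itself, including the `cdn.example.com` host and the `build_csp` helper, is a hypothetical placeholder. A real policy must list every script, style, and image source your site actually uses, or it will break the page.

```python
# Hypothetical policy; "cdn.example.com" is a placeholder host.
POLICY = {
    "default-src": ["'self'"],                            # fallback for unlisted resource types
    "script-src": ["'self'", "https://cdn.example.com"],  # where scripts may load from
    "img-src": ["'self'", "data:"],
    "frame-ancestors": ["'none'"],                        # forbid framing (anti-clickjacking)
}


def build_csp(policy: dict) -> str:
    """Serialize {directive: [sources]} into a Content-Security-Policy value."""
    # Each directive becomes "name source1 source2"; directives are joined by "; ".
    return "; ".join(f"{name} {' '.join(sources)}" for name, sources in policy.items())


header_name, header_value = "Content-Security-Policy", build_csp(POLICY)
print(f"{header_name}: {header_value}")
```

Your web server, CMS plugin, or CDN would then attach this header to every page response.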

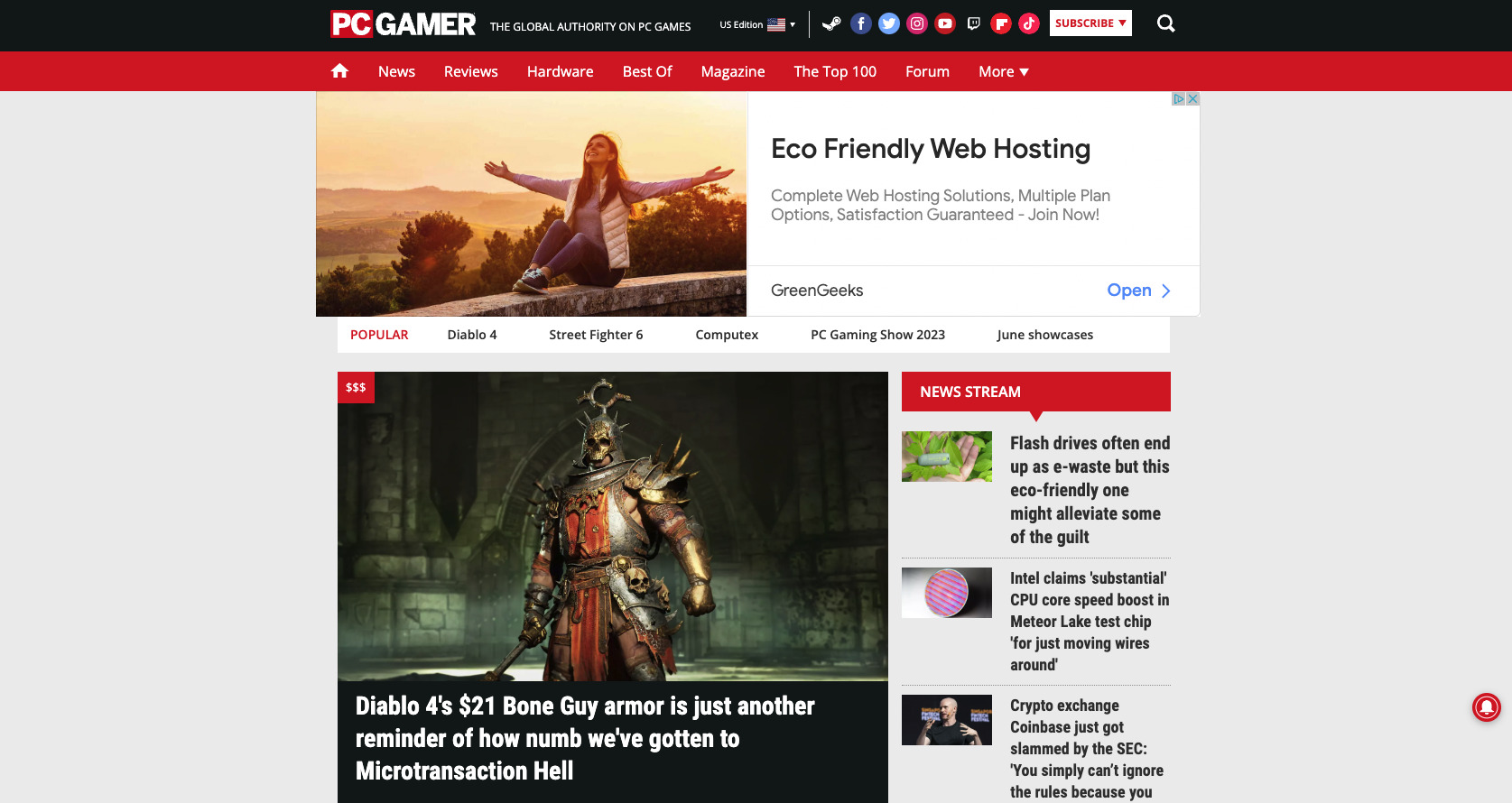

More importantly, identifying and discounting likely bot traffic is a prerequisite for proper data analysis, because unfiltered bot traffic can skew any meaningful reporting you hope to do. This can adversely affect any number of data points, from search performance to click-through rates.

Since malicious bots make up a large portion of overall internet traffic, it is in every organization’s best interest to defend its website against as many of these bad bots as possible.

Sustainability Challenges with Bots and Web Crawlers

There are also substantial environmental concerns for unchecked bot traffic. Every time a bot crawls a URL, websites use energy to serve requested data.

This is not a significant amount if it’s a single bot visiting a single page. However, website URLs are crawled by bots constantly. Plus, many websites get a lot of traffic. For example:

- The homepage of a media- and traffic-heavy website, like PCGamer.com, might emit an estimated 7g of carbon dioxide equivalent (CO2e) per visit.

- According to SimilarWeb, the PC Gamer website receives around 20-30 million visitors per month.

- And that is just one page. What if the website has thousands, or tens of thousands, of pages?

Based on these figures, you can see how emissions associated with this website can quickly add up. Since bots make up a large portion of website traffic, you can also see how restricting this traffic can significantly reduce the site’s environmental impact.
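To make that concrete, here is a back-of-the-envelope calculation that assumes, purely for illustration, that each of those 20-30 million monthly visits emits the same 7g CO2e as the homepage (1 t = 10^6 g). Real visits land on many different pages, so treat the result as an order-of-magnitude figure, not a measurement:

```latex
\begin{aligned}
E_{\text{low}}  &= 7\ \text{g CO}_2\text{e/visit} \times 2.0\times10^{7}\ \text{visits/month} = 1.4\times10^{8}\ \text{g} = 140\ \text{t CO}_2\text{e/month} \\
E_{\text{high}} &= 7\ \text{g CO}_2\text{e/visit} \times 3.0\times10^{7}\ \text{visits/month} = 2.1\times10^{8}\ \text{g} = 210\ \text{t CO}_2\text{e/month}
\end{aligned}
```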

Quantifying the Environmental Impact of Web Crawlers

Quantifying this impact is more challenging. The internet is a complex ecosystem. Much of the digital supply chain data you need to accurately estimate emissions associated with bot traffic lives in closed third-party systems that you probably don’t have access to.

For broad-stroke estimates, you might apply Imperva’s blanket figure and assume that 42.3% of your overall traffic comes from bots. This won’t reflect your organization’s unique setup and structure, so its accuracy is questionable. Plus, as we established above, not all bots are bad bots. Still, in the absence of anything else, it works for quick napkin math.
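That napkin math fits in a tiny Python function. This is a sketch under loud assumptions: `monthly_visits` should count all traffic, human and bot; the 42.3% default is the Imperva figure; and every bot request is assumed to cost as much as a human page view, which likely overstates things because many bots skip images and scripts. The function name and parameters are illustrative, not part of any tool.

```python
def bot_emissions_estimate(monthly_visits: int, grams_per_visit: float,
                           bot_share: float = 0.423) -> float:
    """Rough monthly CO2e, in tonnes, attributable to bot traffic.

    monthly_visits: all visits, human and bot.
    grams_per_visit: estimated CO2e per visit (e.g. from a carbon calculator).
    bot_share: fraction of traffic from bots; 0.423 is Imperva's 2021 figure.
    """
    if not 0.0 <= bot_share <= 1.0:
        raise ValueError("bot_share must be between 0 and 1")
    total_grams = monthly_visits * grams_per_visit
    # Assumes a bot request costs as much as a human page view, which probably
    # overstates it: many bots never download images, scripts, or fonts.
    return total_grams * bot_share / 1_000_000  # grams -> tonnes


estimate = bot_emissions_estimate(25_000_000, 7.0)
print(f"~{estimate:.0f} t CO2e per month")
```

For a hypothetical site with 25 million total monthly visits at 7g CO2e each, this returns roughly 74 tonnes of CO2e per month attributable to bots.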

You can also turn to other data sources to glean more accurate information. There are pros and cons to each approach. These data sources might include:

- Analytics Data: Google Analytics and related tools provide in-depth traffic analysis, though you will need to make some adjustments to identify bot traffic in these reports.

- SaaS Tools: Web services like Cloudflare or CheQ Essentials have specific tools to help you understand and manage bot traffic, though this comes with service fees.

- Your IT Team: Engineers can review network requests to identify likely bot traffic, though this is time-consuming, especially for an already overwhelmed IT team.
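If your IT team wants a starting point, a first pass often looks at the user-agent string in server access logs. The Python sketch below assumes logs in the common Apache/Nginx "combined" format and uses a short, hypothetical keyword list; `count_bot_requests` is an illustrative helper. It will undercount, because bad bots frequently impersonate regular browsers, so user-agent matching only catches bots that identify themselves.

```python
import re

# Hypothetical, deliberately short keyword list for self-identified bots.
BOT_PATTERN = re.compile(r"bot|crawl|spider|slurp|curl|python-requests", re.IGNORECASE)

# In the "combined" log format, the user agent is the last quoted field.
USER_AGENT = re.compile(r'"([^"]*)"\s*$')

SAMPLE_LOG = [
    '203.0.113.5 - - [10/Oct/2023:13:55:36 +0000] "GET / HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '198.51.100.9 - - [10/Oct/2023:13:55:40 +0000] "GET /blog/ HTTP/1.1" 200 8450 '
    '"https://example.com/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) '
    'AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0 Safari/537.36"',
]


def count_bot_requests(log_lines):
    """Return (bot_requests, total_requests) for combined-format log lines."""
    bots = total = 0
    for line in log_lines:
        match = USER_AGENT.search(line)
        if match is None:
            continue  # not a combined-format line; skip it
        total += 1
        if BOT_PATTERN.search(match.group(1)):
            bots += 1
    return bots, total


bots, total = count_bot_requests(SAMPLE_LOG)
print(f"{bots} of {total} requests came from self-identified bots")
```

The resulting ratio can stand in for the blanket 42.3% figure in your own napkin math, with the caveat above about spoofed user agents.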

Either way, without performing a full digital life cycle assessment, the best you can hope for is proxy data similar to that provided by Ecograder, Website Carbon, or related tools. Still, it’s a place to start.

Crawl Optimization Tools & Resources

That said, there are a number of tools at your disposal to implement crawl optimization practices and improve website sustainability.

Robots.txt

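With a robots.txt file, you can instruct search engine bots not to crawl pages you don’t need them to visit. Some crawlers also honor a Crawl-delay rule asking them to crawl your site less frequently, although Googlebot ignores it. You add these rules to a plain-text robots.txt file at the root of your domain (for example, https://example.com/robots.txt). Well-behaved crawlers read this file when they visit your site and abide by the rules you’ve designated; malicious bots typically ignore it. Note that robots.txt controls crawling, not indexing: a blocked page can still appear in search results if other sites link to it, so use a noindex directive, on a page crawlers can still reach, for anything that must stay out of results.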

Examples to mark for exclusion are:

- Site administration pages

- PDF files

- Private pages that should only be accessed by a unique URL

- Decorative imagery

- Any other page you don’t need search engines to crawl
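Here is a small example of what such rules might look like, checked with Python’s standard-library `urllib.robotparser`. The paths are hypothetical placeholders for a WordPress-style site. Keep in mind that Python’s parser only does simple prefix matching, so it does not understand the `*` and `$` wildcards that Google supports (for example, for blocking all PDFs), and that Googlebot ignores `Crawl-delay` even though some other crawlers honor it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules; the paths are placeholders for a WordPress-style site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /private/
Crawl-delay: 10
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse from a string instead of fetching a URL

# Rules are matched as simple path prefixes.
print(parser.can_fetch("ExampleBot", "https://example.com/wp-admin/options.php"))  # False
print(parser.can_fetch("ExampleBot", "https://example.com/blog/hello-world/"))     # True
print(parser.crawl_delay("ExampleBot"))                                            # 10
```

Testing rules this way before deploying them is a cheap guard against accidentally blocking pages you do want crawled.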

Link Cleaning Tools

You can use link cleaning tools to clean and organize lists of URLs in pages by removing duplicates, sorting them, and validating their format. Examples include:
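Whichever tool you use, the core operations are straightforward. This minimal Python sketch, built on the standard-library `urllib.parse` module, shows one reasonable set of rules in a hypothetical `clean_urls` helper: it discards anything that is not an absolute http(s) URL, lowercases the host, drops `#fragments`, removes duplicates, and sorts the result. These normalization rules are assumptions; dedicated tools may normalize more aggressively (for example, by stripping tracking parameters).

```python
from urllib.parse import urlsplit, urlunsplit


def clean_urls(urls):
    """Validate, normalize, de-duplicate, and sort a list of URLs."""
    cleaned = set()  # a set drops exact duplicates automatically
    for raw in urls:
        parts = urlsplit(raw.strip())  # urlsplit already lowercases the scheme
        # Validation: keep only absolute http(s) URLs that include a host.
        if parts.scheme not in ("http", "https") or not parts.netloc:
            continue
        # Normalization: hosts are case-insensitive and #fragments point to
        # the same document, so fold those variants together.
        cleaned.add(urlunsplit((parts.scheme, parts.netloc.lower(),
                                parts.path or "/", parts.query, "")))
    return sorted(cleaned)


print(clean_urls([
    "https://Example.com/about#team",
    "https://example.com/about",
    " https://example.com/blog/?page=2 ",
    "ftp://example.com/file.zip",
    "not a url",
]))
# ['https://example.com/about', 'https://example.com/blog/?page=2']
```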

Site Error Tools

Tools for resolving 404 errors may not reduce the number of URLs being crawled, but they can reduce the number of crawls wasted on invalid pages while also offering possible gains in SEO performance. Examples include:
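One way to prioritize 404 fixes is to count which missing URLs get requested most often. The sketch below assumes you have already extracted `(path, status_code)` pairs from your server logs; `top_missing_urls` is a hypothetical helper. Fixing the top entries first, with a redirect or by restoring the content, should remove the most wasted crawls.

```python
from collections import Counter


def top_missing_urls(entries, limit=5):
    """Rank the paths that most often return 404 Not Found.

    entries: iterable of (path, status_code) pairs, e.g. pulled from access logs.
    """
    counts = Counter(path for path, status in entries if status == 404)
    return counts.most_common(limit)


log_entries = [
    ("/old-post/", 404),
    ("/about/", 200),
    ("/old-post/", 404),
    ("/feed/atom/", 404),
]
print(top_missing_urls(log_entries))  # [('/old-post/', 2), ('/feed/atom/', 1)]
```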

CMS Tools

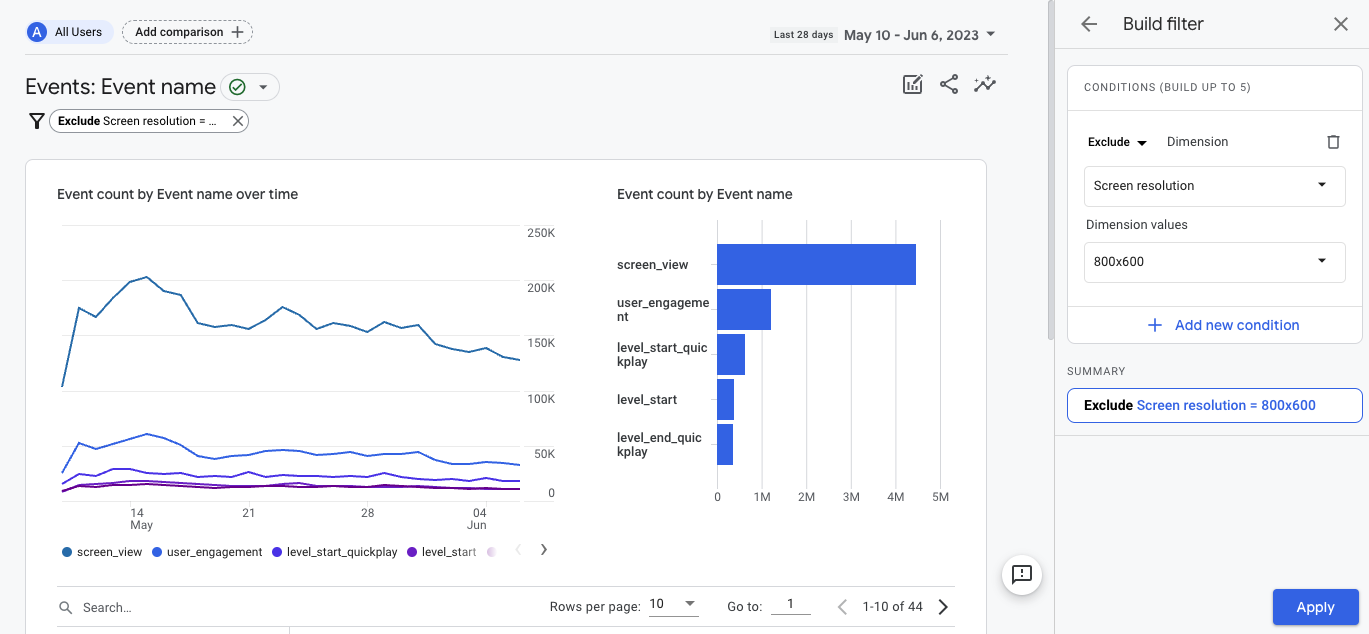

If you use a CMS like WordPress, Wix, or Squarespace, chances are your website has unused functionality that adds to the amount of data transferred when bots crawl your website.

Some CMS platforms add URLs or code that crawlers will follow and read. This extra data can range from comment feeds to shortlinks and URLs that APIs use to interact with your site. Often, you may not even be aware of what’s built into your CMS, as most of this happens behind the scenes.

This is where tools such as Yoast’s new crawl optimization features could help reduce crawler-related emissions by eliminating unused URLs on WordPress websites. Here are some examples:

- Crawl Optimization plugin for WordPress helps remove unnecessary links and information from your HTTP headers.

- The Cloudflare plugin provides a host of optimizations. With a paid plan, it can also offer a level of protection against certain WordPress threats and vulnerabilities that bad bots might try to exploit, and in some cases block those bots altogether.

- Smartcrawl SEO for WordPress is a feature-rich SEO plugin that gives users the ability to audit and improve their technical and semantic SEO.

- Yoast recently added a crawl optimization tool to their flagship SEO plugin. More on this below.

Reducing Site URLs

Perhaps the greatest tool at your disposal is simply to reduce the number of URLs you create. Consider the following to get started:

- Media Library: Audit your media library before adding new images to ensure that you do not add duplicate content.

- Blog Posts: Refresh old blog posts instead of creating new posts on the same subject. Use content clusters and a content audit to reduce and better organize website content.

- Slugs: Avoid changing slugs—the unique identifying part at the end of any URL—unless you have a good reason. (If you must, create meaningful redirects to improve user experience.)
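When you do add redirects, avoid chains (an old URL redirecting to a newer URL that redirects again), since each hop costs crawlers an extra request. Assuming your redirects can be exported as a simple old-to-new mapping (the dictionary format, the slugs, and the `flatten_redirects` helper are all hypothetical; real sites store redirects in server config or a plugin), this Python sketch rewrites every entry to point straight at its final destination and flags loops.

```python
def flatten_redirects(redirects: dict) -> dict:
    """Point every old URL straight at its final destination.

    Raises ValueError if the mapping contains a redirect loop.
    """
    flat = {}
    for source in redirects:
        seen = {source}
        target = redirects[source]
        # Follow the chain until we reach a URL that is not itself redirected.
        while target in redirects:
            if target in seen:
                raise ValueError(f"redirect loop involving {source}")
            seen.add(target)
            target = redirects[target]
        flat[source] = target
    return flat


redirect_map = {
    "/2019/green-hosting/": "/green-hosting/",
    "/green-hosting/": "/sustainable-web-hosting/",
}
print(flatten_redirects(redirect_map))
# {'/2019/green-hosting/': '/sustainable-web-hosting/', '/green-hosting/': '/sustainable-web-hosting/'}
```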

Yoast’s Crawl Optimization Features

Yoast’s plugin allows website owners to remove features present in WordPress that create URL bloat, such as:

- Shortlinks: a shortened version of a page URL

- REST API links: links that advertise the WordPress REST API, which lets other applications interact with your site (WordPress adds these automatically)

- Embed links: oEmbed discovery links that let other websites embed your posts

- Post comment feeds: RSS feeds of the comments on each post

- Category feeds: provide information about your recent posts for each category

- And more . . .
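Before toggling anything off, it helps to see which of these links your site actually emits. This Python sketch uses the standard-library `html.parser` to scan a page’s markup for `<link>` tags. The matching rules in the hypothetical `classify` helper are assumptions based on default WordPress output: `rel="shortlink"`, `rel="https://api.w.org/"` for the REST API, oEmbed discovery links (which appear to be what the "embed links" setting refers to), and English feed titles ending in "Comments Feed" or "Category Feed". Themes, plugins, and translated sites may differ.

```python
from html.parser import HTMLParser
from typing import Optional


def classify(attrs: dict) -> Optional[str]:
    """Label a <link> tag's attributes, or return None if it isn't one we track."""
    rel = (attrs.get("rel") or "").lower()
    mime = (attrs.get("type") or "").lower()
    title = (attrs.get("title") or "").lower()
    if rel == "shortlink":
        return "shortlink"
    if rel == "https://api.w.org/":
        return "REST API link"
    if "oembed" in mime:
        return "oEmbed (embed) link"
    if rel == "alternate" and title.endswith("comments feed"):
        return "comment feed"
    if rel == "alternate" and title.endswith("category feed"):
        return "category feed"
    return None


class HeadLinkAudit(HTMLParser):
    """Collect (label, href) for every <link> tag that classify() recognizes."""

    def __init__(self):
        super().__init__()
        self.found = []

    def handle_starttag(self, tag, attrs):
        # attrs arrives as a list of (name, value) pairs; value may be None.
        if tag == "link":
            attr_map = dict(attrs)
            label = classify(attr_map)
            if label:
                self.found.append((label, attr_map.get("href")))


SAMPLE_HEAD = """
<link rel="stylesheet" href="/style.css" />
<link rel="shortlink" href="https://example.com/?p=123" />
<link rel="https://api.w.org/" href="https://example.com/wp-json/" />
<link rel="alternate" type="application/json+oembed"
      href="https://example.com/wp-json/oembed/1.0/embed?url=https%3A%2F%2Fexample.com%2Fhello-world%2F" />
<link rel="alternate" type="application/rss+xml"
      title="Example &raquo; Hello World Comments Feed"
      href="https://example.com/hello-world/feed/" />
"""

audit = HeadLinkAudit()
audit.feed(SAMPLE_HEAD)
audit.close()
for label, href in audit.found:
    print(f"{label}: {href}")
```

Running this against a few representative pages (home, post, category archive) gives a quick inventory of what each toggle would actually remove.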

The ability to remove unused features is potentially powerful functionality for website owners. Yoast explains what each toggle in the optimization section does. However, depending on your tech stack, this tool can break your website if not used properly. For example, if your ecommerce processes depend on a REST API link, removing that link type could cause serious issues.

When using Yoast’s tool, be certain that the features you remove are extraneous and not something your website needs to operate. This is especially true of the advanced settings.

Perhaps most importantly, the principle behind this tool applies beyond Yoast and even WordPress: site owners, developers, marketers, and web teams should ensure that the sites they work on include only the functionality needed to perform key functions.

Relatedly, Yoast’s Eco-Mode for WordPress Servers from the CloudFest Hackathon looks like a promising addition to the growing list of website sustainability tools and resources. It would be great to see Yoast more clearly quantify the environmental benefits of these tools so product teams can better benchmark their website sustainability efforts.

Page Optimization to Reduce Crawl Resources

Finally, we should also make sure that our pages are as lean and load as little data as possible. In addition to decreasing crawl load, this will improve page performance and responsiveness and may positively impact your search rankings. Here are some resources to help you get started:

- Web Accessibility Webinar: 30 Things to Know (because accessible code is clean code)

- Minifying or Munging Code for Faster Website Page Speed

- How to Optimize Images for Faster Load Times and Sustainability

- What is Page Speed and Why Does it Matter?

- Technical Debt, Agile, and Sustainability

For a full list of tactics to make your website more sustainable, see our sustainable web design post.

Can Crawl Optimization Help Organizations Meet Website Sustainability Goals?

The crawl problem is a compound problem. It’s not a single cause, but a combination of search engines crawling too often, hackers constantly going around the web to find vulnerable websites, and the fact that sites have way more URLs than they realize…

— Taco Verdonschot, Head of Relations at Yoast via The WP Minute

No individual website will fix the internet’s sustainability issues. However, there are compelling business reasons to implement crawl optimization practices: they can improve website sustainability and search performance and help your organization create a more sustainable SEO strategy.

Spend the effort to minimize crawl assets, block bad bots, and make your website more crawl-friendly. This will reduce feature bloat, improve security, and speed up your website, resulting in happier users, improved conversion rates, and reduced costs. What business doesn’t want that?
