How A/B Testing Improves Websites

Here’s how a clear A/B testing strategy can help you make better data-informed decisions, provide better website experiences for customers, and meet business goals more effectively.

How well do you know your website users? In any line of business, it is common to form opinions about what customers want based on our own experience. However, these assumptions are often emotion-based or driven by subjective observation rather than by objective data. A/B testing uses hard data to help you answer whether or not these assumptions are actually true.

What is A/B Testing?

A/B tests provide simple ways to test web page changes against the current design in order to determine which elements produce positive results. A/B tests offer ways to validate a change ahead of time, so that you know that it will improve your conversion rates before you alter site code.

— Dan Siroker, Co-Founder, Optimizely

Web pages are collections of elements grouped together for a single purpose. In a perfect world, that purpose serves both your business goals and your users’ needs. For example, you may want users to:

- Learn something new

- Buy a product or service

- Download a document

- Watch a video

- Sign up for a newsletter

However, your users might have different goals. They might just be browsing or searching for something else entirely. One useful way to test whether or not your digital product actually serves user needs is to implement an A/B testing program.

A/B testing is the process of comparing performance data for two variations of a single element to learn which drives more user interactions. This helps you figure out whether or not page elements effectively contribute to a page’s overall purpose.

More importantly, A/B testing is part of a larger conversion rate optimization strategy that can be used to improve user experience and overall website performance while also helping you better meet your business goals over time. It is a useful tool in designing a sustainable data strategy for your organization.

At Mightybytes, we have run hundreds of A/B tests for clients and see firsthand the value this approach can bring to improving digital products long-term. For the right organization, it is a worthwhile investment.

A/B Tests vs. Multivariate Tests

Lots of managers run sequential tests — e.g., testing size first (large versus small), then testing color (blue versus red), then testing typeface (Times versus Arial) — because they believe they shouldn’t vary two or more factors at the same time.

— Kaiser Fung, Harvard Business Review

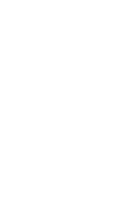

Notably, A/B tests typically compare two versions (A vs. B, hence the name) of a single website or product component. For example, you might test a blue button versus a red button to see which gets more clicks. Or you might change call-to-action (CTA) text on that button for the same purpose.

On the other hand, multivariate tests compare multiple variables at once. You might test button color, font, size, and copy in a single test. Or you might test completely different page components, like a form and a hero image, in a single test.

Depending on the metrics you choose to measure, it can be difficult to know which specific element drove the most improvement in a multivariate test. In the example above, was it button color? Text size? Font choice? Button copy? Or a combination of these? For the purposes of this post, we’ll focus on A/B tests.
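To illustrate why attribution gets harder in a multivariate test, here is a minimal Python sketch that counts the variations created by the button example. The option values and the daily visitor figure are hypothetical and chosen only for illustration.

```python
from itertools import product

# Hypothetical options for each button property under test.
options = {
    "color": ["blue", "red"],
    "font": ["Times", "Arial"],
    "size": ["large", "small"],
    "copy": ["Learn more", "Get started"],
}

# Every combination of one value per property is its own variation.
combinations = [dict(zip(options, values)) for values in product(*options.values())]
print(len(combinations))  # 2 * 2 * 2 * 2 = 16 variations

# Hypothetical traffic figure: the same visitors are spread much thinner.
daily_visitors = 8_000
print(daily_visitors // len(combinations))  # visitors per variation, multivariate
print(daily_visitors // 2)                  # visitors per variation, simple A/B
```

With only two options per property, four properties already produce 16 variations, so each one sees a fraction of the traffic an A/B variant would.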

A/B Testing in Practice

How does A/B testing actually work? First, it’s important to understand that webpage performance can always be improved. Marketers who regularly test website performance using methods like A/B testing better understand how to accommodate their users. A testing mindset encourages experimentation and continuous learning. To run an effective test:

- Split the Elements: Visitors are assigned to one of the test variants, A or B, when the page loads. This is often a 50/50 split, though most tools allow you to adjust percentages or run more than two variations.

- Set the Time Frame: The test runs for a predetermined amount of time, usually two weeks to a month, though this can be shorter if your site gets heavy traffic (see the six-day example below).

- Analyze the Data: Whichever variation performs better during the test is added to the site permanently, or at least until that component becomes part of another A/B test.
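The splitting step above can be sketched in a few lines of Python. This is one common approach (hashing a visitor ID into a bucket), not necessarily how any of the tools discussed in this post do it. The function name `assign_variant`, the experiment name, and the visitor IDs are all hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, share_a: float = 0.5) -> str:
    """Return "A" or "B" for a visitor, stable across page loads."""
    # Hash the experiment name together with the visitor ID so the same
    # visitor can land in different buckets for different experiments.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    # Map the first 8 hex digits to a number in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "A" if bucket < share_a else "B"


# Hypothetical visitor IDs; in practice these might come from a cookie.
visitors = [f"visitor-{n}" for n in range(10_000)]
counts = {"A": 0, "B": 0}
for visitor in visitors:
    counts[assign_variant(visitor, "homepage-banner")] += 1
print(counts)  # close to an even split
```

Because the assignment is derived from the ID rather than a fresh random draw, a returning visitor keeps seeing the same variant. Changing `share_a` adjusts the split away from 50/50.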

With all this said, here are a few important points to consider before devising an A/B testing strategy.

Know Your Users (And Their Pain Points)

Before you can effectively incorporate quantitative A/B testing into ongoing website improvements, it will help to clearly understand your users and their pain points. This entails doing specific qualitative user research to uncover key insights. It is common to complete at least some of this research when creating a product or redesigning a website.

Here are three things you can do to better understand user needs:

- User Personas: Persona exercises can help you reach consensus on common traits users have. However, user personas are often based on assumptions or aggregated data and can be way off target. Validate (or refute) those assumptions prior to running any tests.

- Qualitative Interviews: Conducting user interviews can help you validate the assumptions in your personas, get useful feedback on an existing product or service, or learn whether a specific feature or content idea will resonate with potential customers. Interview at least five to eight users under each persona type for better data and deeper insights with which to make testing decisions.

- User Testing: If you’re trying to uncover specific insights in a user interview, it helps to give participants something to interact with. You can tree test navigation ideas, share feature prototypes, or merely show wireframes to help users understand key product components.

Accessibility as a Baseline

Relatedly, everything you test should follow current accessibility standards. Accessible solutions are more inclusive and work for the widest audience possible. Users with disabilities make up as much as 20% of internet users, so alienate them at your own risk. Accessibility isn’t a requirement for A/B tests, but it’s the smart and ethical thing to do. Plus, you could get slapped with a lawsuit if your content isn’t accessible.

Get Comfy With Statistics

Next, you should be comfortable with statistical analysis. If you don’t know how to interpret test data or properly configure tests to pull the right data, it may be hard to get good, actionable results. Remember, garbage in = garbage out, so make sure the tests you run have clear goals that are aligned with your business strategy and users’ needs.

Also, there is typically a margin of error in A/B tests, so consider that when interpreting results. If you run an A/B test and one variant wins by 2 percentage points while each result carries a margin of error of 1.5 points, the plausible ranges for the two variants overlap, so it is entirely possible that they are roughly comparable.

In this case, the statistics don’t definitively offer one winner over another. This doesn’t mean the test was a failure. It might just mean that your two variations aren’t different enough from one another to show statistically significant improvement. Create a new option and try again.
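As a rough illustration of the margin-of-error point, the sketch below uses the normal approximation for a conversion rate at a 95% confidence level (z = 1.96). The visitor and conversion counts are hypothetical, and the function names are our own. Treat the overlap check as a quick, conservative signal rather than a formal significance test.

```python
import math


def margin_of_error(conversions: int, visitors: int, z: float = 1.96) -> float:
    """Approximate margin of error for a conversion rate (normal approximation)."""
    rate = conversions / visitors
    return z * math.sqrt(rate * (1 - rate) / visitors)


def interval(conversions: int, visitors: int) -> tuple[float, float]:
    """Return the (low, high) range around the observed conversion rate."""
    rate = conversions / visitors
    moe = margin_of_error(conversions, visitors)
    return rate - moe, rate + moe


def intervals_overlap(a: tuple[float, float], b: tuple[float, float]) -> bool:
    return a[0] <= b[1] and b[0] <= a[1]


# Hypothetical results: A converts at 10%, B at 12%, with 2,000 visitors each.
range_a = interval(200, 2000)
range_b = interval(240, 2000)
print(range_a, range_b)
print(intervals_overlap(range_a, range_b))  # True: the ranges overlap
```

In this made-up case, B leads by two points, but each rate carries a margin of error of roughly 1.3 to 1.4 points, so the two ranges still overlap.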

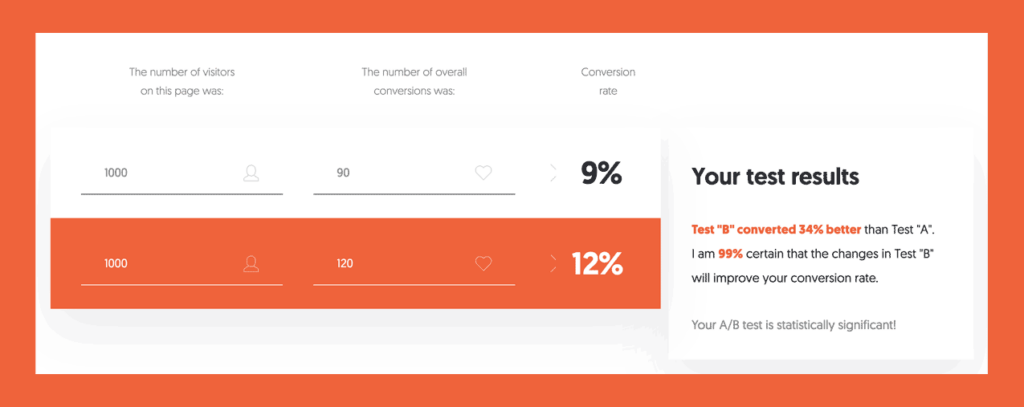

Statistical Significance in A/B Tests

Relatedly, your site will need enough traffic to effectively run these tests. If only 50 people visit your site during a test cycle, the validity of your numbers may be questionable. In other words, the more visitors your site has, the more clearly you’ll know which variation has a greater impact on conversion rates.
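To get a feel for how much traffic is enough, here is a minimal sketch of a standard sample-size estimate for comparing two conversion rates. The baseline and expected rates are hypothetical, and the 5% significance level and 80% power are common defaults rather than requirements.

```python
import math
from statistics import NormalDist


def visitors_per_variant(
    baseline_rate: float,
    expected_rate: float,
    alpha: float = 0.05,
    power: float = 0.8,
) -> int:
    """Estimate the visitors needed per variant to detect the given lift."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)
    variance = baseline_rate * (1 - baseline_rate) + expected_rate * (1 - expected_rate)
    effect = expected_rate - baseline_rate
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# Hypothetical goal: detect a lift from a 3% to a 4% conversion rate.
print(visitors_per_variant(0.03, 0.04))
# A smaller expected lift needs far more traffic.
print(visitors_per_variant(0.03, 0.035))
```

Under these assumptions, detecting a one-point lift takes thousands of visitors per variant, which is why a test cycle with only 50 visitors is unlikely to be conclusive.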

To obtain the volume of data needed for actionable insights, it is common to dovetail A/B testing efforts with dedicated advertising or social media campaigns. For example, if your organization is running a test to increase donations on a certain landing page, it makes sense to drive as much qualified traffic to that page as you can during the test’s duration.

Moreover, the line of statistical significance is not always clear. Any number of factors can impact your tests. This A/B Testing Significance Calculator from Neil Patel can help you get more out of your A/B tests.
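For readers curious about what a significance calculator does, the sketch below runs a two-proportion z-test, one common method for comparing conversion rates. We are not claiming it is the method behind any particular calculator. The counts are hypothetical.

```python
import math
from statistics import NormalDist


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value for the difference between two conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pool both variants to estimate the rate if there were no real difference.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_error
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical results: A converts 120 of 4,000 visitors, B converts 150 of 4,000.
p_value = two_proportion_p_value(120, 4000, 150, 4000)
print(round(p_value, 3))
print("significant at 5%" if p_value < 0.05 else "not significant at 5%")
```

In this made-up case, B converts 25% more visitors than A in relative terms, yet the result narrowly misses the conventional 5% threshold. This is the kind of unclear line described above.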

Common A/B Testing Questions

While A/B testing for digital products has been around for some time, it is a new way for many to measure the success of their website, mobile app, or other product. Here are some common questions that come up for new testers:

Which Website Elements Are Good for A/B Testing?

You can A/B test any user-facing element of your website. Some of the most commonly tested items are:

- Button placement, colors, copy, etc.

- Headline copy, font size, etc.

- Product pricing

- Product photos and placement

- Interactive elements like sliders, checkboxes, toggle switches, popup boxes, etc.

- Call-to-action language or placement

- Form fields

Per the recommendations above, prioritize testing items that directly relate to your business goals and your users’ needs. That is where you will capture the most impactful data.

What A/B Testing Tool Should I Use?

There are plenty of A/B testing tools available. Here are several common solutions:

- Optimizely: A digital experience platform that offers a variety of products and services grounded in experimentation and A/B testing.

- Google Optimize: A free A/B and multivariate testing tool that uses advanced statistical modeling and is made to work with Google’s other platforms, such as Ads and Analytics.

- VWO: An A/B testing and conversion rate optimization platform tailored specifically for enterprise brands.

- Adobe Target: An omnichannel testing solution that provides insights across digital channels, including your website.

- Crazy Egg: Another platform that, in addition to A/B testing, offers heat mapping and other usability testing tools.

At Mightybytes, we have used Optimizely, Target, Crazy Egg, and Optimize in the past. Most of our current tests are done using Optimize because of its integration with other Google tools, and Target because it is a client’s preferred platform.

When’s a Good Time to Start A/B Testing?

Most tools make it easy to get started quickly, often without programming expertise. In other words, you can start a testing program any time, like tomorrow or next Thursday. That said, uncertainty is often at its highest during a redesign or when new features are added to a product. A/B testing offers opportunities to try out new things that can inform redesign options or new features:

- Adding New Features: Not sure about which options to include in a new feature? Test options by incorporating some of them into an existing live feature.

- Website Redesigns: Is your website redesign part of a larger organizational rebrand? Test elements like logos, taglines, and so on to see how they perform against existing assets.

Common A/B Testing Challenges

Here are some common challenges we’ve run across during our years of A/B testing website content and features:

- Product Owner Hesitation: Sometimes, website or product owners are unwilling to make markedly different changes to page components for fear that they’ll lose customers, reduce revenue numbers, etc.

- Component Configuration: Setting up accurate tracking across different environments, like Popup Maker, various form plugins, Google Tag Manager, and the tools mentioned above, can make it challenging to get the right data in the right place.

- Interpreting the Data: Sometimes the numbers don’t show a clear winner, leading to analysis paralysis about what to do next.

An A/B Testing Example

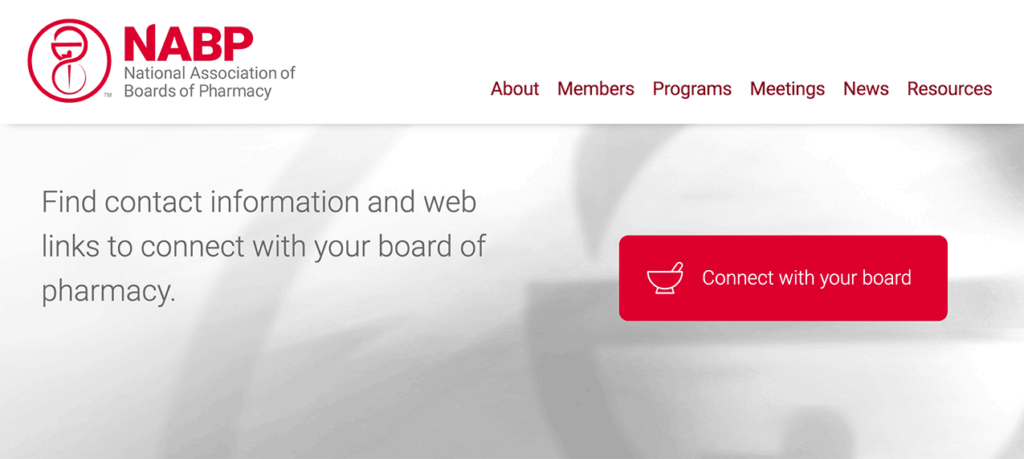

Here is a simple A/B testing example. Shortly after a website redesign project, our client, the National Association of Boards of Pharmacy (NABP), wanted to test their new homepage banner to learn which messaging might drive more user engagement.

The Original Banner

NABP’s original homepage banner invited users to connect with their local board of pharmacy. The tagline—which read “Find contact information and web links to connect with your board of pharmacy.”—was focused on a specific user type. The banner also featured a large button which read “Connect with your board”.

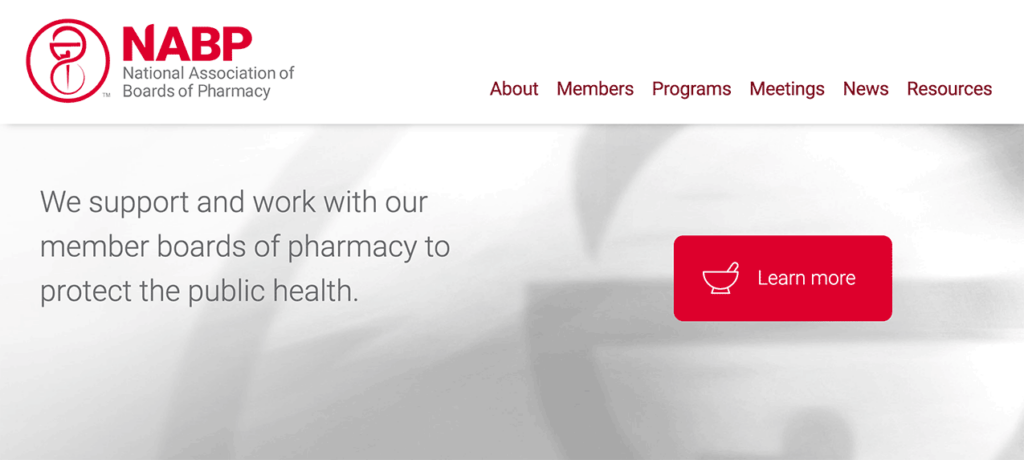

The Variant

The new banner variant featured a tagline which read “We support and work with our member boards of pharmacy to protect the public health.” It also included a button that read “Learn more”.

The Test

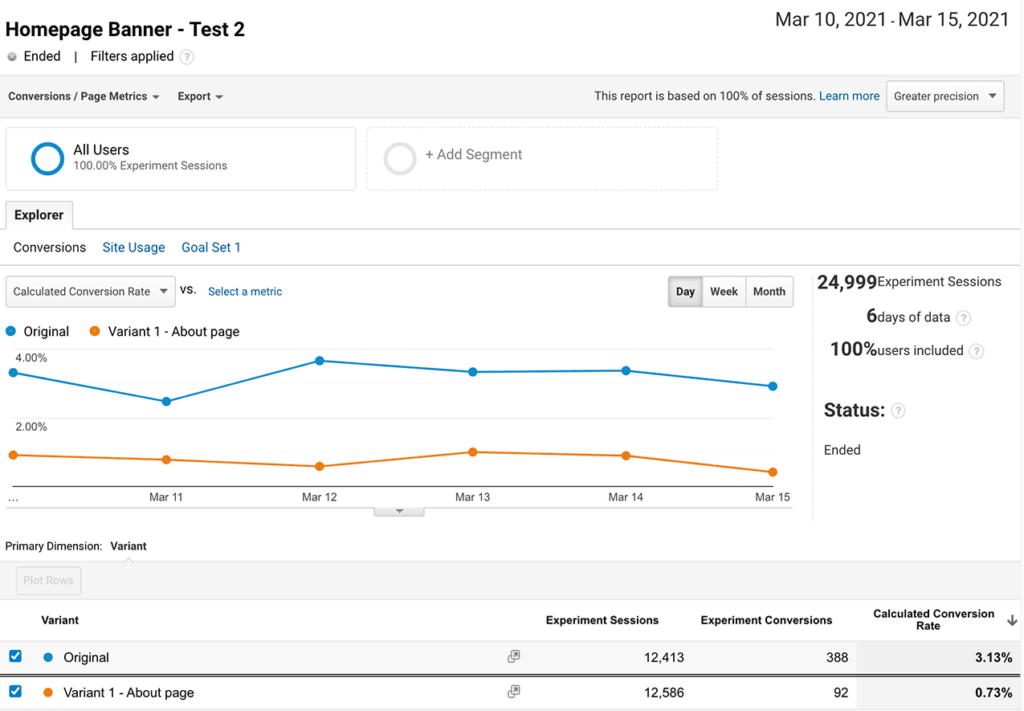

In this test, we tracked the number of button clicks each banner received. Because of the high traffic numbers NABP’s website receives, we were able to reach statistical significance quickly (in around 24 hours).

We let the test run for six days, which is short for an A/B test. However, thanks to NABP’s high traffic numbers, we were able to quickly confirm that the original banner created more engagement. The calculated conversion rate for the original banner was more than 3%, while the variant came in at under 1%.

If NABP wanted to further test options, they might experiment with different button copy, colors, contrast, size of both button and tagline, and so on to learn which options provide the most engaging experience for their website users.

A/B Testing: Final Word

Many websites and other digital products are built on assumptions made by the people who own or build them. A/B testing takes the guesswork out of learning what content will resonate with your audience. With a robust testing program in place, you shouldn’t have to redesign your website every 3-5 years. Instead, you can make continuous improvements to better engage users based on test data, increasing your product’s longevity and measurably improving results over time.

If you want to learn more about how Mightybytes uses A/B testing to help our clients improve product performance and make better data-driven marketing decisions over time, check out our conversion rate optimization services.